Learn how to optimize your SEO with the right use of robots.txt files using Teasoft’s simple generator tool.

Robots.txt files guide search engine crawlers toward the pages worth crawling and away from unnecessary ones. In this blog, we look at why managing your robots.txt file properly matters for your SEO strategy. We also introduce Teasoft’s easy-to-use robots.txt generator, a tool that simplifies creating and managing the file so that search engines focus on your most important pages. Follow the best practices below to help your website’s content get crawled and indexed for maximum search engine visibility.

A well-structured robots.txt file is an essential component of any successful SEO strategy. It serves as a guide for search engine crawlers, telling them which pages of your website to crawl and which ones to skip. When properly optimized, it can enhance your site’s SEO performance by directing crawlers to your most valuable pages and away from duplicate or low-value content. A poorly configured robots.txt file, however, can harm your site’s visibility and cause search engines to overlook key pages. Understanding how to create and manage robots.txt is therefore crucial for every website owner and SEO professional looking to improve their site’s search engine rankings.

At Teasoft, our Robots.txt Generator makes it easier than ever to create a properly optimized robots.txt file. Whether you are new to SEO or a seasoned expert, our tool offers a simple, user-friendly way to generate a robots.txt file tailored to your specific needs. By understanding the importance of this file and how it affects your website’s indexing, you can make informed decisions about how to manage your site's visibility on search engines.

What is a Robots.txt File?

The robots.txt file is a simple text file that tells search engine crawlers (like Googlebot) which pages or sections of your website they may or may not crawl. It plays a crucial role in SEO by controlling how search engines interact with your website’s content. Without a properly configured robots.txt file, search engines might waste time crawling irrelevant pages. Note that robots.txt controls crawling rather than indexing: a blocked URL can still appear in search results if other pages link to it.

This file must be placed in the root directory of your website and follows a specific syntax. Search engine crawlers, when visiting your site, first check the robots.txt file to determine the rules for crawling. The directives in the file tell search engines which parts of the site to focus on and which parts to avoid, giving you control over how your site is crawled.
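To make the syntax concrete, here is a minimal sketch in Python that holds a small robots.txt file as a string and asks the standard library's `urllib.robotparser` how a crawler would read it. The domain `www.example.com` and the paths are placeholders chosen for illustration, not rules taken from any real site.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical rules for an example site; the paths are illustrative only.
ROBOTS_TXT = """\
User-agent: *
Disallow: /admin/
Disallow: /login/

Sitemap: https://www.example.com/sitemap.xml
"""


def build_parser(robots_txt: str) -> RobotFileParser:
    """Parse robots.txt text without making any network request."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


parser = build_parser(ROBOTS_TXT)

# A regular content page is not matched by any Disallow rule.
blog_allowed = parser.can_fetch("Googlebot", "https://www.example.com/blog/post-1")
# Anything under /admin/ is blocked for every user agent.
admin_allowed = parser.can_fetch("Googlebot", "https://www.example.com/admin/settings")

print(blog_allowed, admin_allowed)
```

Each `User-agent` line opens a group of rules, and each `Disallow` line names a path prefix that the matching crawlers should not fetch. Python's parser uses simple prefix matching, so results for more complex rules (for example wildcards) may differ from what a particular search engine does.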

Why is Robots.txt Important for SEO?

Optimizing your robots.txt file for SEO is important because it directly influences which pages search engines can crawl. If used correctly, it can improve your site’s SEO performance. For example, if you have duplicate content, you can use robots.txt to stop search engines from spending crawl time on those pages. Keep in mind that robots.txt is publicly readable and only controls crawling, so a blocked URL can still show up in search results if other sites link to it. For private or sensitive pages, rely on authentication or a noindex directive (which only works if crawlers are allowed to fetch the page) rather than on robots.txt alone.

On the other hand, if misused, robots.txt can harm your website’s SEO efforts. For instance, blocking crawlers from important content, such as product pages or blog posts, can keep those pages from ranking properly in search results. This will limit your site’s visibility and reduce organic traffic. Therefore, understanding how to manage your robots.txt file properly is crucial to maximizing your website’s SEO potential.
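A small illustration of how easily this can go wrong: the two rule sets below differ by a single character, yet one blocks the whole site and the other blocks nothing. This is a sketch using Python's standard `urllib.robotparser`; the helper name `is_allowed` and the example URL are assumptions made for the demonstration.

```python
from urllib.robotparser import RobotFileParser


def is_allowed(robots_txt: str, url: str, user_agent: str = "*") -> bool:
    """Return True if the given rules let the user agent fetch the URL."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


# "Disallow: /" matches every path, so the entire site is blocked.
BLOCK_ALL = "User-agent: *\nDisallow: /\n"
# An empty Disallow value matches nothing, so the entire site stays crawlable.
ALLOW_ALL = "User-agent: *\nDisallow:\n"

product_page = "https://www.example.com/products/widget"
blocked_result = is_allowed(BLOCK_ALL, product_page)
open_result = is_allowed(ALLOW_ALL, product_page)

print(blocked_result, open_result)
```

A stray `/` left over from a staging configuration is a common way for important pages to drop out of crawling, which is why checking the file after every change is worthwhile.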

Creating a Robots.txt File

Creating a robots.txt file is relatively straightforward. The first step is to understand your website’s structure and identify which pages crawlers should reach and which they should skip. Typically, you would want search engines to crawl your most valuable pages, such as your homepage, blog posts, and product pages, while blocking irrelevant pages like admin panels, login pages, and duplicate content.

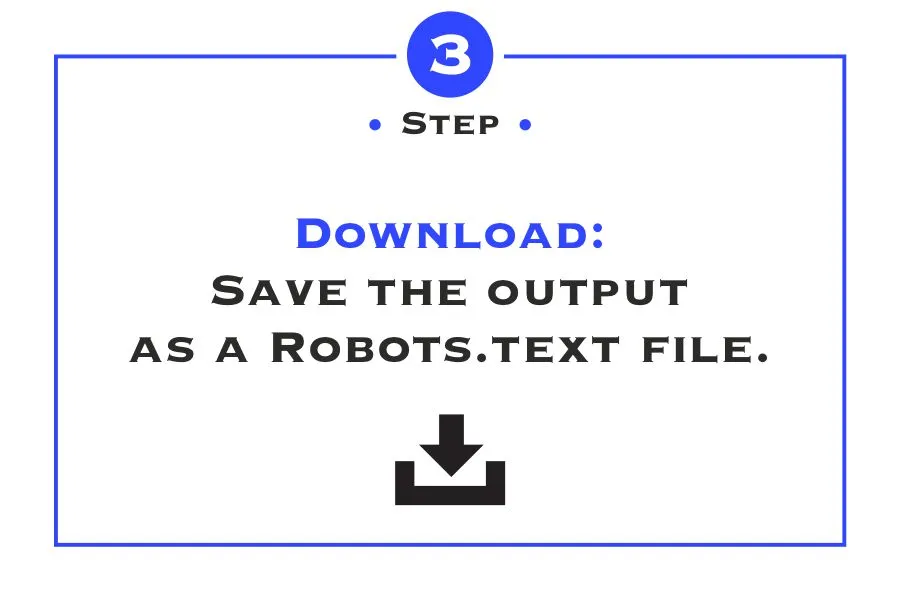

Once you have a clear understanding of what you want to allow or disallow, you can begin generating the file. If you’re comfortable editing raw text, you can create the file manually by writing the appropriate directives. If you prefer a more automated approach, tools like the Teasoft Robots.txt Generator let you generate the file with ease. Our tool is designed to help you create the file quickly by simply selecting the pages you want to allow or disallow for crawling. After generating the file, you can download it and upload it to the root directory of your website.
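For readers who want to write the file by hand or script it, the following Python sketch assembles robots.txt text from lists of paths. It is not the Teasoft generator's implementation; the function `build_robots_txt`, its parameters, and the example paths are all hypothetical.

```python
def build_robots_txt(disallow, allow=(), user_agent="*", sitemap=None):
    """Assemble robots.txt text for a single user-agent group.

    Allow lines are written before Disallow lines so that parsers which
    apply the first matching rule see the exceptions first.
    """
    lines = [f"User-agent: {user_agent}"]
    lines += [f"Allow: {path}" for path in allow]
    lines += [f"Disallow: {path}" for path in disallow]
    if sitemap:
        # A blank line separates the rule group from the sitemap reference.
        lines += ["", f"Sitemap: {sitemap}"]
    return "\n".join(lines) + "\n"


# Example paths are placeholders for an imaginary site.
robots_txt = build_robots_txt(
    disallow=["/admin/", "/login/"],
    sitemap="https://www.example.com/sitemap.xml",
)
print(robots_txt)
```

Saving the returned text as a file named `robots.txt` and uploading it to the site root is all that remains after this step.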

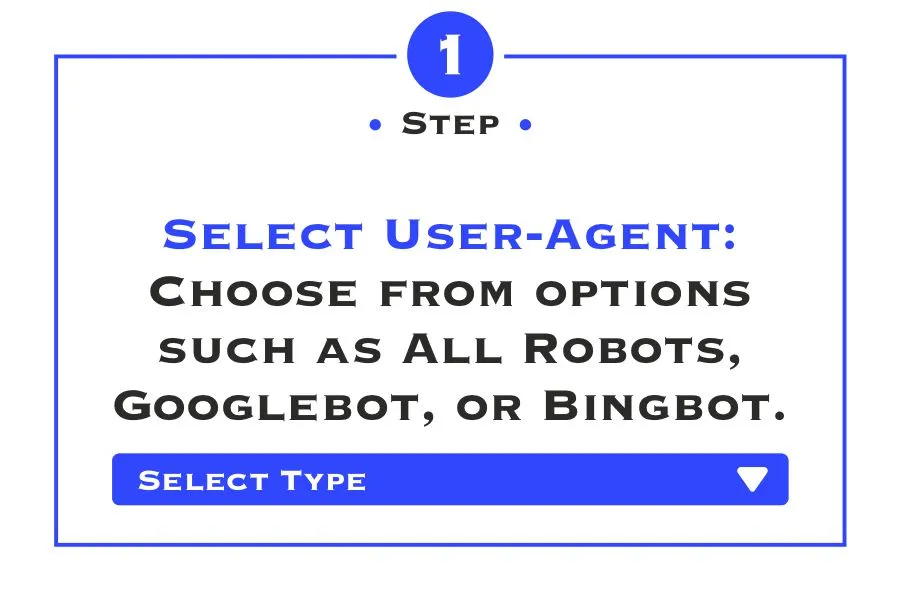

How to Use the Teasoft Robots.txt Generator

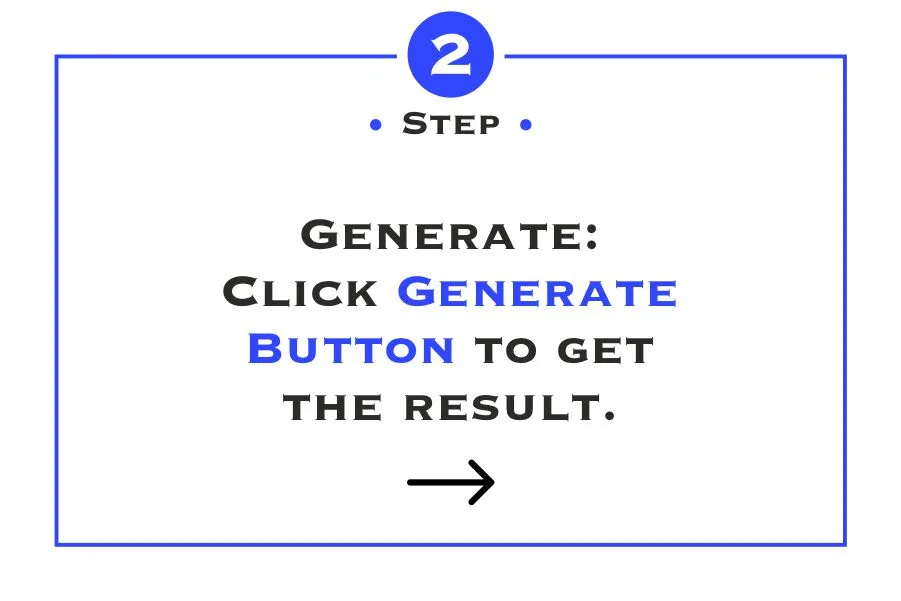

Using the Teasoft Robots.txt Generator is simple and easy. First, visit the Robots.txt Generator page. You will be presented with an interface where you can input the specific pages and directories of your site that you want to allow or block from crawling. Based on your preferences, the generator will create a custom robots.txt file for you.

Once you have selected your preferences, the generator will automatically populate the robots.txt file with the correct syntax. Afterward, you can download the file to your computer and upload it to the root directory of your website. Search engine crawlers will pick up the file the next time they request it. Because crawlers cache robots.txt (Google generally refreshes its copy within about a day), changes may take some time to take effect.
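Crawlers look for the file at one fixed location: `/robots.txt` at the root of the host. As a quick sanity check on where the upload should end up, this Python sketch derives that location from any page URL. The function name `robots_txt_url` and the example URL are assumptions for illustration.

```python
from urllib.parse import urlsplit, urlunsplit


def robots_txt_url(page_url: str) -> str:
    """Return the robots.txt URL that governs the given page.

    The scheme and host are kept; the path, query string, and fragment
    are replaced, because the file is only read from the host root.
    """
    parts = urlsplit(page_url)
    return urlunsplit((parts.scheme, parts.netloc, "/robots.txt", "", ""))


location = robots_txt_url("https://www.example.com/blog/post-1?ref=home")
print(location)  # https://www.example.com/robots.txt
```

A file uploaded to a subdirectory such as `/blog/robots.txt` is ignored, and each subdomain needs its own copy.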

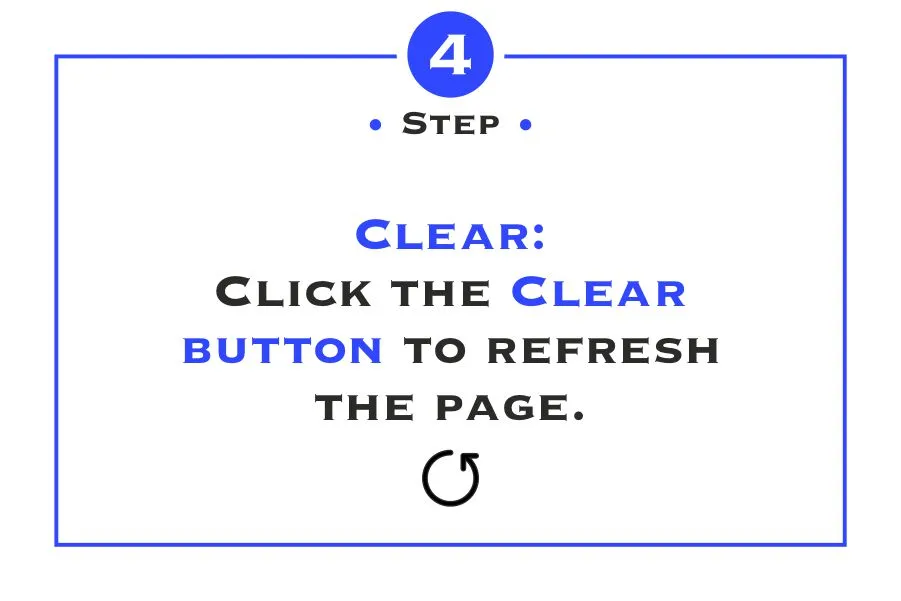

This process is efficient and user-friendly, making it easy for website owners to manage their site's SEO without needing any coding skills. Whether you’re a beginner or a seasoned SEO professional, the Teasoft Robots.txt Generator makes it easy to create and manage your robots.txt file with just a few clicks.

Testing and Verifying Your Robots.txt File

Once you have created and uploaded your robots.txt file, it’s important to test and verify that it is functioning correctly. Search engine crawlers need to be able to access and read your robots.txt file in order to follow the directives you have set. You can use the robots.txt report in Google Search Console or other similar tools to check whether your file is working as intended.

If there are any errors, the tool will flag them so you can fix the issue. Verifying your robots.txt file ensures that it is not blocking important content or leaving unwanted pages open to crawlers. Regularly testing your robots.txt file is a good practice to ensure that it continues to serve your SEO goals and doesn’t inadvertently cause any crawling or indexing problems.
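Alongside online testers, a regular check can be scripted. The sketch below, again using Python's standard `urllib.robotparser`, compares a set of URLs against the outcome you expect for each and reports any mismatch. The function `check_rules`, the rules, and the URLs are hypothetical examples.

```python
from urllib.robotparser import RobotFileParser


def check_rules(robots_txt, expectations, user_agent="*"):
    """Return the URLs whose crawl permission differs from what is expected.

    `expectations` maps each URL to True (should be crawlable) or
    False (should be blocked).
    """
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    problems = []
    for url, should_be_allowed in expectations.items():
        if parser.can_fetch(user_agent, url) != should_be_allowed:
            problems.append(url)
    return problems


# Illustrative rules containing a mistake: the blog has been blocked.
ROBOTS_TXT = "User-agent: *\nDisallow: /blog\nDisallow: /admin/\n"

expectations = {
    "https://www.example.com/blog/post-1": True,   # should be crawlable
    "https://www.example.com/admin/": False,       # should be blocked
}

problems = check_rules(ROBOTS_TXT, expectations)
print(problems)  # ['https://www.example.com/blog/post-1']
```

Running a check like this after each change to the file catches accidental blocks before crawlers see them. Since this parser applies simple prefix matching, treat it as a first pass and confirm the result with the search engine's own tools.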

Conclusion: Master Your Robots.txt for Better SEO

The robots.txt file plays a vital role in controlling how search engines interact with your website. When used correctly, it helps ensure that your valuable content is crawled while keeping crawlers away from unwanted or low-value pages. With Teasoft’s Robots.txt Generator, creating and managing your robots.txt file has never been easier. By following best practices and regularly testing your file, you can ensure that your website remains optimized for SEO and that search engines focus on the pages that matter most to your business.
